Dapper is a simple object mapper, a nuget package that extends the IDbConnection interface. This powerful package come in handy when writing simple CRUD operations. The thing I struggle from time to time is handling big data with Dapper. When handling hundreds of thousands of objects at once brings a whole variety of performance problems you might run into. I’ll show you today how to handle many inserts with Dapper.

The problem

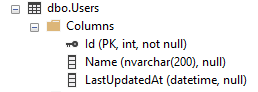

Let’s have a simple repository, that inserts users into DB. Table in DB will look like this:

Now let’s have a look at the code:

public async Task InsertMany(IEnumerable<string> userNames)

{

using (var connection = new SqlConnection(ConnectionString))

{

await connection.ExecuteAsync(

"INSERT INTO [Users] (Name, LastUpdatedAt) VALUES (@Name, getdate())",

userNames.Select(u => new { Name = u })).ConfigureAwait(false);

}

}

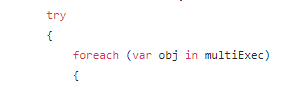

Very simple code, that takes user names and passes a collection of objects to Dapper extension method ExecuteAsync. This is a wonderful shortcut, that instead of one object, you can pass a collection and have this sql run for every object. No need to write a loop for that! But how this is done in Dapper? Lucky for us, Dapper code is open and available on GitHub. In SqlMapper.Async.cs on line 590 you will see:

There is a loop inside the code. Fine, nothing wrong with that… as long as you don’t need to work with big data. With this approach, you end up having a call to DB for every object in the list. We can do it better.

What if we could…

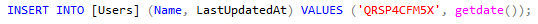

What if we could merge multiple insert sqls into one big sql? This brilliant idea gave me my colleague, Miron. Thanks, bro!:) So instead of having:

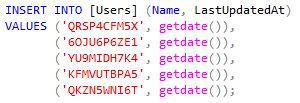

We can have:

The limit here is 1000, cause SQL server does not allow to set more values in one insert command. Code gets a bit more complicated, cause we need to create separate sqls for every 1000 users.

public async Task InsertInBulk(IList<string> userNames)

{

var sqls = GetSqlsInBatches(userNames);

using (var connection = new SqlConnection(ConnectionString))

{

foreach (var sql in sqls)

{

await connection.ExecuteAsync(sql);

}

}

}

private IList<string> GetSqlsInBatches(IList<string> userNames)

{

var insertSql = "INSERT INTO [Users] (Name, LastUpdatedAt) VALUES ";

var valuesSql = "('{0}', getdate())";

var batchSize = 1000;

var sqlsToExecute = new List<string>();

var numberOfBatches = (int)Math.Ceiling((double)userNames.Count / batchSize);

for (int i = 0; i < numberOfBatches; i++)

{

var userToInsert = userNames.Skip(i * batchSize).Take(batchSize);

var valuesToInsert = userToInsert.Select(u => string.Format(valuesSql, u));

sqlsToExecute.Add(insertSql + string.Join(',', valuesToInsert));

}

return sqlsToExecute;

}

Lets compare!

Code is nice and tidy, but is it faster? To check it I uesd a local database and a simple users name generator. It’s just a random, 10 character string.

public async Task<JsonResult> InsertInBulk(int? number = 100)

{

var userNames = new List<string>();

for (int i = 0; i < number; i++)

{

userNames.Add(RandomString(10));

}

var stopwatch = new Stopwatch();

stopwatch.Start();

await _usersRepository.InsertInBulk(userNames);

stopwatch.Stop();

return Json(

new

{

users = number,

time = stopwatch.Elapsed

});

}

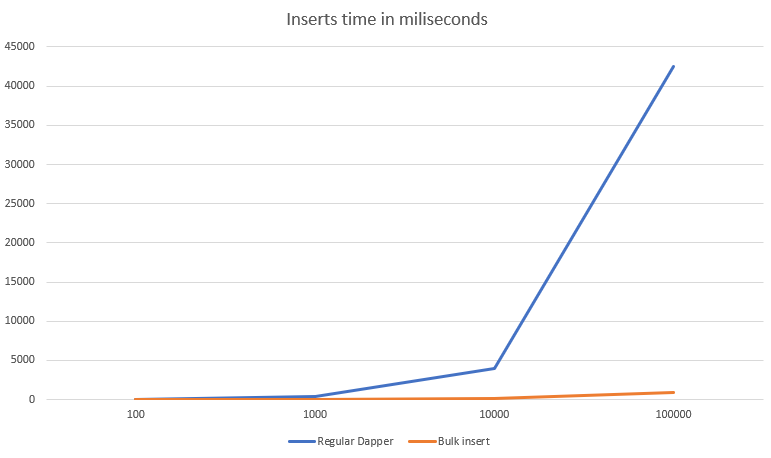

I tested this code for 100, 1000, 10k and 100k. Results surprised me.

The more users I added, the best performance gain I got. For 10k users it 42x and for 100k users it’s 48x improvement in performance. This is awesome!

It’s not safe

Immediately after posting this article, I got comments from you, that this code is not safe. Joining raw strings like that in a SQL statement is a major security flaw, cause it’s exposed for SQL injection. And that is something we need to take care of. So I came up with the code, that Nicholas Paldino suggested in his comment. I used DynamicParameters to pass values to my sql statement.

public async Task SafeInsertMany(IEnumerable<string> userNames)

{

using (var connection = new SqlConnection(ConnectionString))

{

var parameters = userNames.Select(u =>

{

var tempParams = new DynamicParameters();

tempParams.Add("@Name", u, DbType.String, ParameterDirection.Input);

return tempParams;

});

await connection.ExecuteAsync(

"INSERT INTO [Users] (Name, LastUpdatedAt) VALUES (@Name, getdate())",

parameters).ConfigureAwait(false);

}

}

This code works fine, however it’s performance is comparable to regular approach. So it is not really a way to insert big amounts of data. An ideal way to go here is to use SQL Bulk Copy and forget about Dapper.

![]() All code posted here you can find on my GitHub: https://github.com/mikuam/Blog

All code posted here you can find on my GitHub: https://github.com/mikuam/Blog

I know that there is a commercial Dapper extension, that helps with bulk operations. You can have a look here. But wouldn’t it be nice, to have a free nuget package for it? What do you think?