I want to make 1000 requests! How can I make it really fast? Let’s have a look at 4 approaches and compare their speed.

Preparations

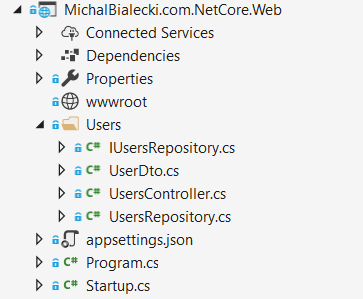

To test different methods of handling requests, I created a very simple ASP.NET Core API that returns a user by ID. It fetches users from a plain old MSSQL database.

I deployed it to Azure using App Services, and it was ready for testing in less than two hours. It’s amazing how quickly a .NET Core app can be deployed and tested in a real hosting environment. I was also able to debug it remotely and check how it works in Application Insights.

Here is my post on how to build an app and deploy it to Azure: https://www.michalbialecki.com/2017/12/21/sending-a-azure-service-bus-message-in-asp-net-core/

And a post about custom data source in Application Insights: https://www.michalbialecki.com/2017/09/03/custom-data-source-in-application-insights/

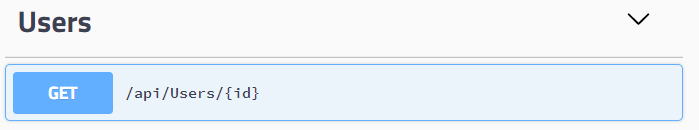

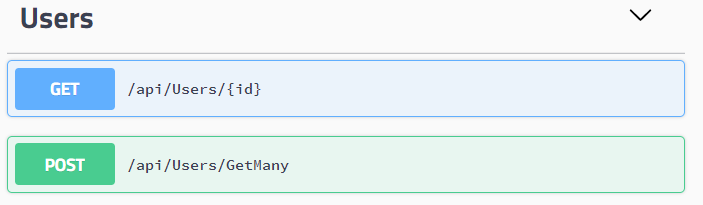

In Swagger, the API looks like this:

So the task here is to write a method that calls this endpoint and fetches 1000 users by their IDs as fast as possible.

I wrapped a single call in a UsersClient class:

    // Requires: using System.Net.Http; using System.Threading.Tasks; using Newtonsoft.Json;
    public class UsersClient
    {
        // A single HttpClient instance is reused for all requests.
        private HttpClient client;

        public UsersClient()
        {
            client = new HttpClient();
        }

        public async Task<UserDto> GetUser(int id)
        {
            var response = await client.GetAsync(
                "http://michalbialeckicomnetcoreweb20180417060938.azurewebsites.net/api/users/" + id)
                .ConfigureAwait(false);

            var user = JsonConvert.DeserializeObject<UserDto>(await response.Content.ReadAsStringAsync());

            return user;
        }
    }

#1 Let’s use asynchronous programming

Asynchronous programming in C# is very simple: you just use the async / await keywords in your methods and the magic happens.

    public async Task<IEnumerable<UserDto>> GetUsersSynchronously(IEnumerable<int> userIds)
    {
        var users = new List<UserDto>();

        // Each request is awaited before the next one is started.
        foreach (var id in userIds)
        {
            users.Add(await client.GetUser(id));
        }

        return users;
    }

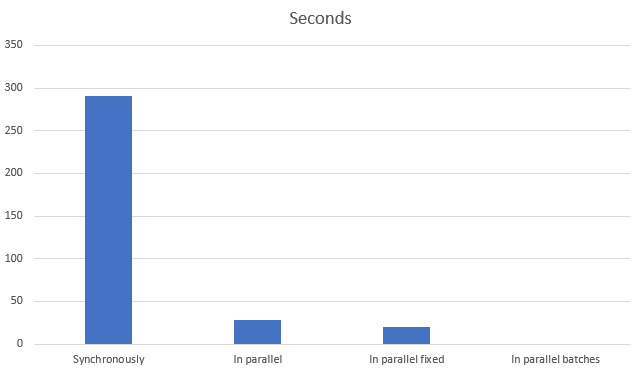

Score: 4 minutes 51 seconds

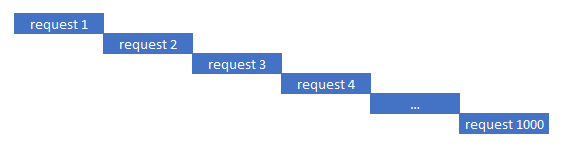

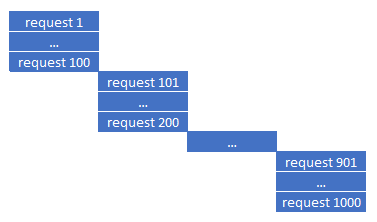

This is because asynchronous programming does not by itself mean that requests are made in parallel. Asynchronous means that a request does not block the calling thread, which is free to do other work while waiting. In the loop above, however, each request is awaited before the next one starts. If you look at how requests are executed in time, you will see something like this:
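As a rough illustration of why the loop is slow, here is a minimal sketch that replaces the real HTTP call with `Task.Delay`. `SequentialDemo`, `FakeGetUser` and the latency value are made up for this example and are not part of the original project.

```csharp
using System;
using System.Diagnostics;
using System.Threading.Tasks;

public static class SequentialDemo
{
    // Hypothetical stand-in for UsersClient.GetUser: waits instead of calling the API.
    public static async Task<int> FakeGetUser(int id, int latencyMs)
    {
        await Task.Delay(latencyMs);
        return id;
    }

    // Awaits each call before starting the next one, like the foreach loop above.
    public static async Task<TimeSpan> RunSequentially(int count, int latencyMs)
    {
        var stopwatch = Stopwatch.StartNew();
        for (var id = 0; id < count; id++)
        {
            await FakeGetUser(id, latencyMs);
        }
        return stopwatch.Elapsed;
    }
}
```

With 5 calls of 50 ms each, `RunSequentially(5, 50)` takes about 250 ms, because the waits add up. The same thing happens with 1000 real requests: 4 minutes 51 seconds is roughly 0.29 seconds per request, one after another.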

#2 Let’s run requests in parallel

Running in parallel is the key here, because you can make many requests in roughly the time that one request takes. The code can look like this:

    public async Task<IEnumerable<UserDto>> GetUsersInParallel(IEnumerable<int> userIds)
    {
        var tasks = userIds.Select(id => client.GetUser(id));
        var users = await Task.WhenAll(tasks);

        return users;
    }

WhenAll is a beautiful creation that waits for tasks of the same type and returns an array of their results. A drawback here is exception handling: when several tasks fail, the task returned by WhenAll carries an AggregateException with all the exceptions, but await rethrows only the first one, and neither tells you directly which task caused it.
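One way to find out which calls failed is to keep each task next to the id it was started for and inspect the tasks after `Task.WhenAll` completes. This is only a sketch: `WhenAllErrors`, `FailuresById` and the `call` delegate are hypothetical names, and `call` stands in for something like `client.GetUser`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

public static class WhenAllErrors
{
    // Starts one call per id and returns the exception for every id that failed.
    // Assumes the ids are unique, because they are used as dictionary keys.
    public static async Task<Dictionary<int, Exception>> FailuresById(
        IEnumerable<int> ids, Func<int, Task> call)
    {
        // Keep each task together with the id it belongs to.
        var tasksById = ids.ToDictionary(id => id, id => call(id));

        try
        {
            await Task.WhenAll(tasksById.Values);
        }
        catch (Exception)
        {
            // await rethrows only the first failure; the tasks are inspected below.
        }

        return tasksById
            .Where(pair => pair.Value.IsFaulted)
            .ToDictionary(pair => pair.Key, pair => pair.Value.Exception.InnerException);
    }
}
```

The result maps each failed id to its exception, so the caller can log or retry exactly those ids.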

Score: 28 seconds

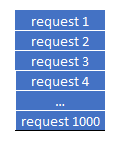

This is way better than before, but it’s not impressive. Starting 1000 requests at the same time has a cost of its own: asynchronous I/O does not need a thread per request, but the client still has to manage that many simultaneous connections and the API has to serve them all at once. Timeline looks like this:

#3 Let’s run requests in parallel, but smarter

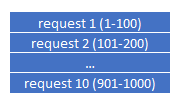

The idea here is to make parallel requests, but not all at the same time. Let’s do it in batches of 100.

    public async Task<IEnumerable<UserDto>> GetUsersInParallelFixed(IEnumerable<int> userIds)
    {
        var users = new List<UserDto>();
        var batchSize = 100;
        int numberOfBatches = (int)Math.Ceiling((double)userIds.Count() / batchSize);

        for (int i = 0; i < numberOfBatches; i++)
        {
            var currentIds = userIds.Skip(i * batchSize).Take(batchSize);
            var tasks = currentIds.Select(id => client.GetUser(id));
            users.AddRange(await Task.WhenAll(tasks));
        }

        return users;
    }

Score: 20 seconds

This is a slightly better result, because fewer requests are in flight at the same time, so both sides handle them more efficiently. You can adjust the batch size and figure out what works best for you. Timeline looks like this:
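Fixed batches have one weakness: every batch waits for its slowest request before the next batch starts. A different technique, not used in the measurements above, is to cap the number of requests in flight with a `SemaphoreSlim`, so a new request starts as soon as any earlier one finishes. The sketch below is an assumption-laden illustration; `Throttled`, `SelectThrottled` and `maxConcurrency` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class Throttled
{
    // Runs call for every item, with at most maxConcurrency calls in flight at once.
    // Results come back in the same order as the input items.
    public static async Task<TResult[]> SelectThrottled<T, TResult>(
        IEnumerable<T> items, Func<T, Task<TResult>> call, int maxConcurrency)
    {
        using (var gate = new SemaphoreSlim(maxConcurrency))
        {
            var tasks = items.Select(async item =>
            {
                await gate.WaitAsync();      // wait for a free slot
                try
                {
                    return await call(item);
                }
                finally
                {
                    gate.Release();          // free the slot for the next item
                }
            }).ToList();                     // ToList starts every task exactly once

            return await Task.WhenAll(tasks);
        }
    }
}
```

With the client from this post, a call could look like `await Throttled.SelectThrottled(userIds, id => client.GetUser(id), 100)`. Whether this beats fixed batches depends on the service, so it is worth measuring both.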

#4 The proper solution

The proper solution needs some modifications in the API. You won’t always have the ability to change the API you are calling, but only changes on both sides can get you even further. It is not effective to fetch users one by one when we need to fetch thousands of them. To further enhance performance, we need to create an endpoint specific to our use case: in this case, fetching many users at once. Now Swagger looks like this:

And the code for fetching users:

    public async Task<IEnumerable<UserDto>> GetUsers(IEnumerable<int> ids)
    {
        var response = await client
            .PostAsync(
                "http://michalbialeckicomnetcoreweb20180417060938.azurewebsites.net/api/users/GetMany",
                new StringContent(JsonConvert.SerializeObject(ids), Encoding.UTF8, "application/json"))
            .ConfigureAwait(false);

        var users = JsonConvert.DeserializeObject<IEnumerable<UserDto>>(await response.Content.ReadAsStringAsync());

        return users;
    }

Notice that the endpoint for getting multiple users is a POST. This is because the payload we send can be big and might not fit in a query string, so it is good practice to use POST in such a case.

Code that would fetch users in batches in parallel looks like this:

    public async Task<IEnumerable<UserDto>> GetUsersInParallelInWithBatches(IEnumerable<int> userIds)
    {
        var tasks = new List<Task<IEnumerable<UserDto>>>();
        var batchSize = 100;
        int numberOfBatches = (int)Math.Ceiling((double)userIds.Count() / batchSize);

        for (int i = 0; i < numberOfBatches; i++)
        {
            var currentIds = userIds.Skip(i * batchSize).Take(batchSize);
            tasks.Add(client.GetUsers(currentIds));
        }

        return (await Task.WhenAll(tasks)).SelectMany(u => u);
    }

Score: 0.38 seconds

Yes, less than one second! On a timeline it looks like this:

Compared to the other methods on a chart, it’s barely even there:

How to optimize your requests

Keep in mind that every case is different, and what works for one service will not necessarily work for the next one. Try different approaches and find ways to measure your results.

Here are a few tips from me:

- Remember that the biggest cost is not processor cycles, but rather IO operations. This includes SQL queries, network operations, and message handling. Look for improvements there.

- Don’t start with parallel processing, as it brings complexity. First try to optimize your service, for example by using hash sets or dictionaries instead of lists.

- Use the smallest DTOs possible and serialize only the fields you actually use.

- Implement an endpoint suited to your needs.

- Use caching if applicable.

- Try different serializers instead of JSON, for example Protobuf.

- When that is still not enough, try a different architecture, such as a push model or actor-model programming with Microsoft Orleans: https://www.michalbialecki.com/2018/03/05/getting-started-microsoft-orleans/
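To illustrate the caching tip, here is a minimal in-memory sketch that makes sure the same id is not fetched twice. `CachingFetcher` and its `fetch` delegate are hypothetical names, with `fetch` standing in for a call such as `client.GetUser`. The sketch has no expiry, a failed task stays cached, and under a race `GetOrAdd` may invoke `fetch` more than once for the same id, so treat it as a starting point only.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

public sealed class CachingFetcher<T>
{
    // Caches the task itself, so concurrent callers share one in-flight request.
    private readonly ConcurrentDictionary<int, Task<T>> cache =
        new ConcurrentDictionary<int, Task<T>>();

    private readonly Func<int, Task<T>> fetch;

    public CachingFetcher(Func<int, Task<T>> fetch)
    {
        this.fetch = fetch;
    }

    // Returns the cached task for this id, or starts a new fetch and caches it.
    public Task<T> Get(int id)
    {
        return cache.GetOrAdd(id, fetch);
    }
}
```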

You can find all code posted here in my github repo: https://github.com/mikuam/Blog.

Optimize and enjoy 🙂

You may want to check out ServicePointManager.DefaultConnectionLimit, since your requests are to the same point they are most likely only 2 at a time actually running. This is most likely the reason for ~20s to run them, not the thread and context switches because those usually take microseconds. That’s assuming your api can handle many concurrent requests.

Hey, thanks! I didn’t know about this one. I checked and I haven’t noticed any big improvements. I dug a bit, and it turns out it is a setting in the full framework, but in .NET Core there’s another one, HttpClientHandler.MaxConnectionsPerServer, that is already set to int.MaxValue.

https://blogs.msdn.microsoft.com/timomta/2017/10/23/controlling-the-number-of-outgoing-connections-from-httpclient-net-core-or-full-framework/

Great post! A lot of useful information written very clearly. Easy to read and understand. Thx!

“… A drawback here would be an exception handling because when something goes wrong you will get an AggregatedException with possibly multiple exceptions, but you would not know which task caused it…”

Actually ‘Task.WhenAll()’ won’t return the AggregateException (you can read here: https://stackoverflow.com/questions/12007781/why-doesnt-await-on-task-whenall-throw-an-aggregateexception and here https://codeblog.jonskeet.uk/2011/06/22/eduasync-part-11-more-sophisticated-but-lossy-exception-handling/). Short explanation is that:

“…Now the team in Microsoft could have decided that really you should catch AggregateException and iterate over all the exceptions contained inside the exception, handling each of them separately. (…) They decided to simply extract the first exception from the AggregateException within a task, and throw that instead…”

So the result of ‘await Task.WhenAll()’ will be a single exception (from the first task that failed). One way to handle the exceptions from the other tasks is, e.g.:

    public static async Task AwaitGracefully(this IList<Task> taskList, Action<Exception> logger)
    {
        try
        {
            await Task.WhenAll(taskList);
        }
        catch (Exception)
        {
            // Log the exception of every faulted task, not only the first one.
            foreach (var task in taskList.Where(t => t.IsFaulted))
            {
                logger(task.Exception?.InnerException ?? task.Exception);
            }
        }
    }

I am really impressed with your writing skills and also with the layout on your blog. Is this a paid theme or did you customize it yourself?

Either way keep up the excellent quality writing, it’s rare to see a nice blog like this one these days.

Hello, would it be possible to add the code of the visual studio project on your github ?

I would like to see the UserDto.cs file.

thanks for the great article.

Hi, yes the code is available on my GitHub: https://github.com/mikuam/Blog/blob/master/ServiceBusExamples/MichalBialecki.com.NetCore.Console/Users/UsersService.cs

UserDto itself is available here: https://github.com/mikuam/Blog/blob/master/ServiceBusExamples/MichalBialecki.com.NetCore.Web/Users/UserDto.cs

Very great post. Thank you very much

In your last example you modified your API to return a list of users instead of a single user. Actually, this does not help in our case, because we might not have access to modify the API. It may be another vendor’s API that we cannot change.

Saeid,

That’s correct. In this example, I wanted to show, that speeding up communication between micro-services can be done on one side just to a certain point. In order to go a step forward, both sides need to be involved.

Thanks for feedback 🙂

In the case of parallel execution where we don’t do batches, and send 1000 requests in parallel, do we have a guarantee to receive responses in the same order we sent the requests?

Hi, thanks for the question. The answer is yes, they will be returned in the same order. Have a look at this question on StackOverflow: https://stackoverflow.com/questions/23175611/task-whenall-result-ordering
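A small sketch that demonstrates the ordering, using `Task.Delay` in place of real requests. `OrderingDemo` is a made-up name for this example. The first task started is the last one to finish, yet the results come back in the order in which the tasks were passed to `Task.WhenAll`.

```csharp
using System.Linq;
using System.Threading.Tasks;

public static class OrderingDemo
{
    // Starts three simulated requests; the first one started is the last to finish.
    public static async Task<int[]> Run()
    {
        var delaysMs = new[] { 90, 60, 30 };
        var tasks = delaysMs.Select(async (delayMs, index) =>
        {
            await Task.Delay(delayMs);
            return index; // position of the request in the input
        });

        // The result array follows the order of the tasks, not the completion order.
        return await Task.WhenAll(tasks);
    }
}
```

`Run()` returns the indexes 0, 1, 2 even though the tasks complete in the order 2, 1, 0.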

Hello,

Thank you very much for your article, it helps me a lot.

I’ve implemented the “Let’s run requests in parallel, but smarter” approach, but I have a problem.

Here is my code:

    var tasks = currentIds.Select(product => client.PutAsync("url", new StringContent("json", Encoding.UTF8, "application/json")));

    response = await Task.WhenAll(tasks);

But I need to associate each response with its product, and I have not managed to do this.

Currently I just have a list of responses, and I do not know which product each response belongs to.

Thank you

Hi Peter,

responses will be returned in the same order, so you can associate them with products from the request. Have a look at this question on StackOverflow: https://stackoverflow.com/questions/23175611/task-whenall-result-ordering
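If relying on positions feels fragile, another option is to return the input together with its response from each task. The sketch below assumes a generic `send` delegate in place of the real `PutAsync` call; `Pairing` and `SendAll` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

public static class Pairing
{
    // Sends every item and returns each item together with its own response.
    public static async Task<(TItem Item, TResponse Response)[]> SendAll<TItem, TResponse>(
        IEnumerable<TItem> items, Func<TItem, Task<TResponse>> send)
    {
        var tasks = items.Select(async item =>
        {
            var response = await send(item);
            return (Item: item, Response: response); // keep the input next to its result
        });

        return await Task.WhenAll(tasks);
    }
}
```

Each element of the result then carries both the product and its response, for example `pair.Item` and `pair.Response`.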

In asp net core u don’t have to use ConfigureAwait(false) – it is pointless …

Maciej,

It’s a very good point. However, the full answer would have a small ‘but’. In ASP.NET Core there’s no `SynchronizationContext`, so there’s no point in using `ConfigureAwait(false)`. However, it is advised to use it when writing a library that could be used in old ASP.NET.

Have a look here for more info.

Thanks very much, I’ve modified your code a bit to make it a generic extension and added an optional delay in between batches.


```c#
public static class TaskExtentions
{
    // https://www.michalbialecki.com/2018/04/19/how-to-send-many-requests-in-parallel-in-asp-net-core/
    public async static Task<IEnumerable<T>> WhenAllInBatches<T>(this Task task, IEnumerable<Task<T>> tasks, int batchSize, int? batchPauseMiliSeconds = null)
    {
        var results = new List<T>();
        int numberOfBatches = (int)Math.Ceiling((double)tasks.Count() / batchSize);

        for (int i = 0; i < numberOfBatches; i++)
        {
            var currentIds = tasks.Skip(i * batchSize).Take(batchSize);
            results.AddRange(await Task.WhenAll(tasks));
            if (batchPauseMiliSeconds.HasValue) await Task.Delay(batchPauseMiliSeconds.Value);
        }

        return results;
    }
}
```

oops should be

    for (int i = 0; i < numberOfBatches; i++)
    {
        var currentBatch = tasks.Skip(i * batchSize).Take(batchSize);
        results.AddRange(await Task.WhenAll(currentBatch));
        if (batchPauseMiliSeconds.HasValue) await Task.Delay(batchPauseMiliSeconds.Value);
    }

Many thanks ,

But i got errors when i tried to use this code with EntityFramework core :

System.InvalidOperationException: A second operation started on this context before a previous operation completed. This is usually caused by different threads using the same instance of DbContext. For more information on how to avoid threading issues with DbContext

Is there any workaround for this error ?

Best regards

Have you registered DbContext in IoC container? ASP.NET Core can handle DbContext class lifecycle and the best way is just to use it like this:

services.AddDbContext(options => options.UseSqlServer(Configuration.GetConnectionString("HotelDB")));

Here is the line from working project:

https://github.com/mikuam/PrimeHotel/blob/4d075b92bb4230d39860d2e0b7d2d1bee7406b96/PrimeHotel.Web/Startup.cs#L46

Hi, this is a great article, helped me a lot.

I have just one question, about the last part.

This PostAsync looks like the method is calling itself, no? Or am I missing something?

To me it looks like this is the method GetMany.

Is the flow this:

http://michalbialeckicomnetcoreweb20180417060938.azurewebsites.net/api/users/GetMany – this is the method that executes in parallel

And public async Task<IEnumerable<UserDto>> GetUsers(IEnumerable<int> ids) – is the async method that calls the method that executes in parallel?

Somehow this looks to me like a double job.

    public async Task<IEnumerable<UserDto>> GetUsers(IEnumerable<int> ids)
    {
        var response = await client
            .PostAsync(
                "http://michalbialeckicomnetcoreweb20180417060938.azurewebsites.net/api/users/GetMany",
                new StringContent(JsonConvert.SerializeObject(ids), Encoding.UTF8, "application/json"))

Great article!

Just one question: how do you choose the right batchSize? Why is it 100, and not 200?

Thanks Franco,

The best batchSize will be different in every application. You need to test and check what would be the right value for you. 100 is just an example that worked for me.

Nice one!

I also have a question. I have to call a SOAP service around 250k times. When I set batchSize to, for example, 100, I get all kinds of errors, such as: “Authentication failed because the remote party has closed the transport stream…”, or “An error occurred while sending the request. The response ended prematurely…” and so on.

If I set batchSize to some small number like 10, everything is fine, but it is slow.

What is the best approach in that kind of situation?

You’re probably being throttled by the service you are calling. It is something that wasn’t mentioned in this post. Making a ton of calls simultaneously to a service is unfeasible in many scenarios, since some services are not able to handle the load. You would have to either implement a retry mechanism or lower the batch size to something that the service can handle.

What I think is that the 3rd option will still spam the sh#t out of the service, so you are left with the 2nd option, which batches in intervals.
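A retry mechanism like the one mentioned above could be sketched as follows. This is a minimal illustration with exponential backoff, not a production policy: `Retry`, `WithRetry` and the parameter names are hypothetical, and real code would usually retry only on transient errors instead of on every exception.

```csharp
using System;
using System.Threading.Tasks;

public static class Retry
{
    // Calls call() up to maxAttempts times (at least 1), doubling the delay after each failure.
    public static async Task<T> WithRetry<T>(
        Func<Task<T>> call, int maxAttempts, TimeSpan initialDelay)
    {
        var delay = initialDelay;
        for (var attempt = 1; ; attempt++)
        {
            try
            {
                return await call();
            }
            catch (Exception) when (attempt < maxAttempts)
            {
                // Assumption: every exception is worth retrying; narrow this in real code.
                await Task.Delay(delay);
                delay = TimeSpan.FromTicks(delay.Ticks * 2);
            }
        }
    }
}
```

When the last attempt also fails, the exception filter no longer matches and the exception reaches the caller. Combined with a smaller batch size, this gives a throttled service time to recover.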

Hi Michał ,

This is really good article. Thanks for sharing your knowledge.

I have just begun to work on parallel requests and async programming and have some doubts.

1. If I have a service to return just one user from ID, and I have multiple concurrent request coming to the server for the same API. what will be the best approach to handle multiple concurrent requests along with async and await ?

2. Is it advisable to use parallel for with webapi to utilize all the cores of the processor, when I again have multiple request coming on that API?

Hi Sachin,

I’m not very familiar with the case you are describing, but I’ll try to answer as well as I can.

1. Web API in .NET Core works very well with many requests in parallel. Even if running the same code, it can be run by multiple threads at the same time. If you use async/await, which is a good practice, your code will be even more parallel friendly. With async/await the processing of a request can switch from thread to thread, so threads are used more efficiently.

2. The ability to work in parallel depends on the common parts of the service. If you’re calling the same database and reusing the same db connection, this is something that requests will use one by one. However, writing API so it can work in parallel is a natural thing so you should only consider modification when there is a performance issue.

Very good article. I am a newbie in programming from Vietnam. I have a question: how can I post 1000 concurrent requests? Thank you.

Great, but I have a question: when one of the requests fails, I want to move on to the next one, like:

    try
    {
    }
    catch
    {
        continue;
    }

Great efforts Michał, really appreciate your efforts. You have explained in a very simple manner, anyone can understand it. Lucky to have come across this blog 🙂

I have one doubt about the execution of the multiple APIs parallelly –

Assume, there are three Async WebAPI Calls made, each taking 2 seconds, 5 seconds and 3 seconds to execute separately. When we run them in parallel, what would be the average “response Time” for executing all the three WebAPIs asynchronously? .

Hope I was able to convey my question for you to understand.

Thanks in advance. Warm Regards

Hi Michal

I know you wrote the article a while back. Have you since tried to use IAsyncEnumerable instead of batching the tasks?