Egnyte is a company that offers secure and fast cloud file storage for business customers. I wrote more about getting started with the Egnyte API in my previous post.

Egnyte.API is a NuGet package that I wrote in .NET. It supports:

- .NET Framework 4.5

- Windows Phone 8.1

- Xamarin

It supports most of the functionality that the Egnyte API offers and helps you manage:

- File system

- Permissions

- Users

- Groups

- Search

- Links

- Audit reporting

If you’d like to improve it, feel free to contribute to its GitHub repository.

Obtaining OAuth 2.0 token

The first step is obtaining a token that can later be used to authenticate each request. Egnyte offers three OAuth 2.0 authorization flows: Resource Owner, Authorization Code and Implicit Grant. The Authorization Code flow is the most common. To make obtaining the token easier, Egnyte.API offers helper methods for all three.

    // Starts the Authorization Code flow by redirecting the user to Egnyte.
    [HttpPost]
    public void RequestTokenCode()
    {
        var authorizeUrl = OAuthHelper.GetAuthorizeUri(
            OAuthAuthorizationFlow.Code,
            Domain,
            PrivateKey,
            RedirectUri);

        Response.Redirect(authorizeUrl.ToString());
    }

    // Starts the Implicit Grant flow by redirecting the user to Egnyte.
    [HttpPost]
    public void RequestTokenImplicitGrant()
    {
        var authorizeUrl = OAuthHelper.GetAuthorizeUri(
            OAuthAuthorizationFlow.ImplicitGrant,
            Domain,
            PrivateKey,
            RedirectUri);

        Response.Redirect(authorizeUrl.ToString());
    }

    // Callback for the Authorization Code flow: exchanges the code for a token.
    [HttpGet]
    public async Task<ActionResult> AuthorizeCode(string code)
    {
        var token = await EgnyteClientHelper.GetTokenFromCode(
            Domain,
            PrivateKey,
            Secret,
            RedirectUri,
            code);

        return Json(JsonConvert.SerializeObject(token), JsonRequestBehavior.AllowGet);
    }
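For orientation, here is a rough sketch of the kind of authorize URL the two redirect actions above send the user to. It does not use the package. The endpoint path and query parameter names follow my reading of Egnyte's public OAuth documentation and are assumptions here, and `EgnyteAuthorizeUrlSketch` with its `Build` method is a hypothetical helper, not part of Egnyte.API. The URL that `OAuthHelper.GetAuthorizeUri` really produces may differ.

```csharp
using System;

// Hypothetical helper for illustration only; not part of the Egnyte.API package.
public static class EgnyteAuthorizeUrlSketch
{
    // responseType: "code" for the Authorization Code flow, "token" for Implicit Grant.
    // The endpoint and parameter names are assumptions based on Egnyte's public docs.
    public static Uri Build(
        string domain,
        string apiKey,
        string redirectUri,
        string responseType,
        string scope = null,
        string state = null)
    {
        var query = "client_id=" + Uri.EscapeDataString(apiKey)
            + "&redirect_uri=" + Uri.EscapeDataString(redirectUri)
            + "&response_type=" + Uri.EscapeDataString(responseType);

        // Optional values are appended only when the caller supplies them.
        if (!string.IsNullOrEmpty(scope))
        {
            query += "&scope=" + Uri.EscapeDataString(scope);
        }

        if (!string.IsNullOrEmpty(state))
        {
            query += "&state=" + Uri.EscapeDataString(state);
        }

        return new Uri("https://" + domain + ".egnyte.com/puboauth/token?" + query);
    }
}
```

Calling `EgnyteAuthorizeUrlSketch.Build("acme", "key123", "https://example.com/callback", "code")` gives a URL on the `acme.egnyte.com` host with the callback address percent-encoded in the query string.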

After you have a token, it’s time to use it.

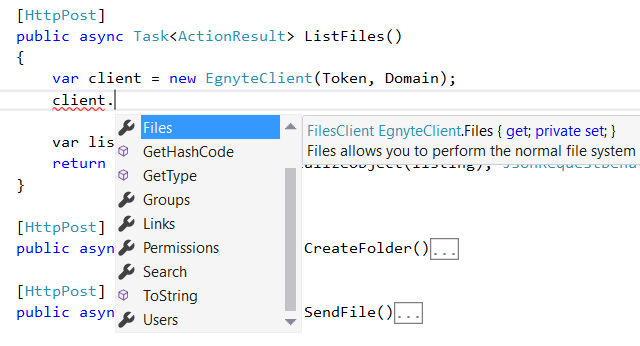

Usage and structure of Egnyte.API package

The simplest usage is just one line – creating a client.

    var client = new EgnyteClient(Token, Domain);

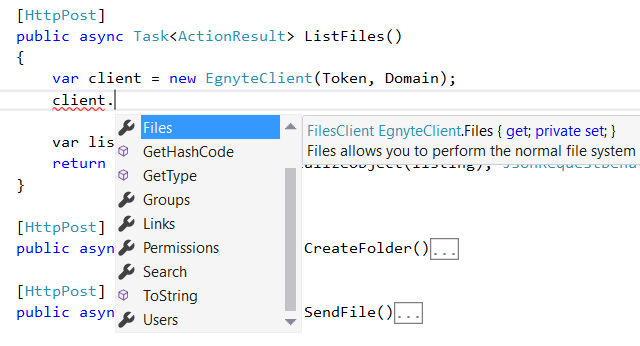

You can also use the optional parameters, for example to pass your own HttpClient if you need some specific configuration. Once the client is created, you are ready to go. Inside the client you will find child clients, one for each of the areas listed above.

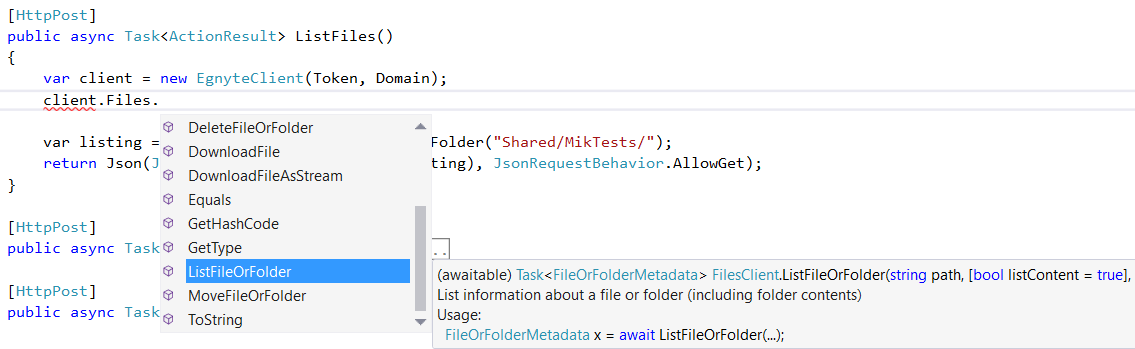

Each method is descriptively named and documents its usage and the purpose of its parameters.

Sample usages

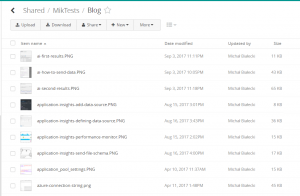

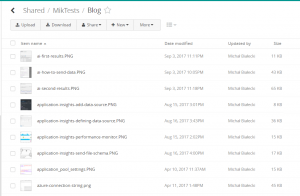

This is what the folder looks like in the Egnyte web view:

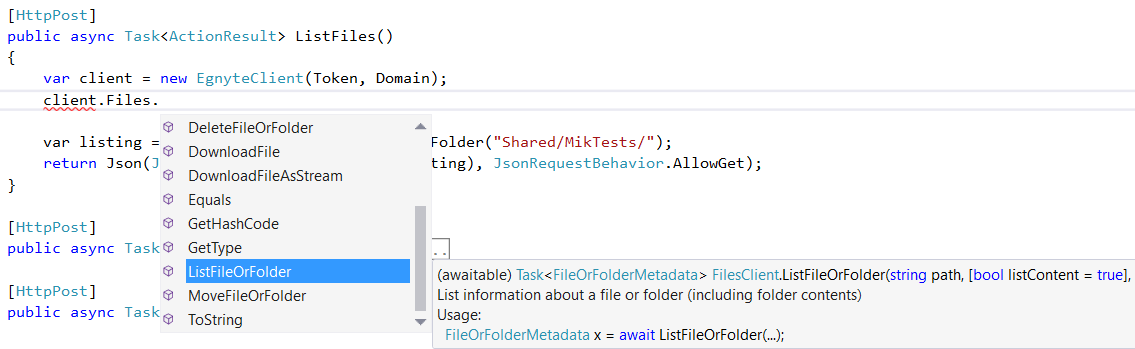

List files

Listing is super easy. Code like this:

    var client = new EgnyteClient(Token, Domain);
    var listing = await client.Files.ListFileOrFolder("Shared/MikTests/Blog");

returns an object that can be serialized to JSON as:

    {"IsFolder":true,"AsFolder":{"Count":0,"Offset":0,"TotalCount":9,"RestrictMoveDelete":false,"PublicLinks":"files_folders","Folders":[],"Files":[
    {"Checksum":"896e4ea21d4a0c692cc5729186ca547a9c80dd0261d22fee2ad6a65642f144712f2d61efc5b5d478b1094153d687afb26db19a10b4eabcf894c534356500cb3c","Size":11706,"Path":"/Shared/MikTests/Blog/ai-first-results.PNG","Name":"ai-first-results.PNG","Locked":false,"EntryId":"a971e61c-dc02-44f9-aba3-ad0d23ee25bd","GroupId":"692602c0-3270-41ab-9b95-f1942724c3b5","LastModified":"2017-09-03T21:11:46Z","UploadedBy":"mik","NumberOfVersions":1},
    {"Checksum":"cb3d2a1709556cfe460261eb41fe74dcd07468c9af55f352feb024d76001109f191b9b829540305b6888f1ea43d9d23b188b96ccd7f59c29a739f72a79829794","Size":43699,"Path":"/Shared/MikTests/Blog/ai-how-to-send-data.PNG","Name":"ai-how-to-send-data.PNG","Locked":false,"EntryId":"3fbd6acf-c36b-4440-ad37-4ddc5f19bc14","GroupId":"586308a7-49e8-4b54-b5e2-ae4b113330f8","LastModified":"2017-09-03T20:05:08Z","UploadedBy":"mik","NumberOfVersions":1},
    {"Checksum":"184c4f201d9e3083b5f28913b1c19a8cc14fee378fac9d2bfcfb3940c19b2a8a901da045ac1b5a1af61ffd681698d797265f25e1087b3313dc9e5cc1e8085912","Size":66954,"Path":"/Shared/MikTests/Blog/ai-second-results.PNG","Name":"ai-second-results.PNG","Locked":false,"EntryId":"1e66e057-0e52-4b5d-a289-b56922a185a4","GroupId":"d464292a-7ccd-43e2-add9-983cf342a9f5","LastModified":"2017-09-03T21:18:46Z","UploadedBy":"mik","NumberOfVersions":1},
    {"Checksum":"10004c02792a22f97d89e22a47f97bb9ebb65def491b7b66a8cda1da7560c010d971a02ec43b6148526275d72c6c4a0ffc19aa79a7f67458ef912d0d949b4d22","Size":8642,"Path":"/Shared/MikTests/Blog/application-insights-add-data-source.PNG","Name":"application-insights-add-data-source.PNG","Locked":false,"EntryId":"0ef8ee1e-3a69-41a6-b2e1-ae25d14be8fa","GroupId":"5bdea173-e195-4f0a-a5cb-7b6a51a32b87","LastModified":"2017-08-15T13:01:42Z","UploadedBy":"mik","NumberOfVersions":1},
    {"Checksum":"4acd351077e97157e8badbff49d892bbe51f80d7043272231676b753d2b1576d0d7acebb49506bf9c40fda0894e80e87c2255e4fb28381c88bd5ea309cb62fde","Size":36501,"Path":"/Shared/MikTests/Blog/application-insights-defining-data-source.PNG","Name":"application-insights-defining-data-source.PNG","Locked":false,"EntryId":"20ba9b7d-eba0-4499-9fbc-84b00ce95ac0","GroupId":"1df2518e-b123-47fb-a725-9837222b4017","LastModified":"2017-08-16T13:43:39Z","UploadedBy":"mik","NumberOfVersions":1},
    {"Checksum":"781419db9514cee18ddb92d106e35ae8dc5998b5b10987ceaec069c8791babffbc4e526d98e2a662a5176f1e9e67a627b60873d307c47009b965f48fe76570b5","Size":15410,"Path":"/Shared/MikTests/Blog/application-insights-performance-monitor.PNG","Name":"application-insights-performance-monitor.PNG","Locked":false,"EntryId":"d985893b-e414-4258-b009-6cf299800993","GroupId":"503e569b-481f-4a0a-b389-06c075713f17","LastModified":"2017-08-15T12:02:56Z","UploadedBy":"mik","NumberOfVersions":1},
    {"Checksum":"74a0f551d27ebe61b5c3b92ee0de4b1c48c60f7bbbfb0e1b9ef917c1f8ee9f7d30f0e8cd35b7579d6b40c020900db2eb8e75bc9c97855d2d49bc3e38c30146fe","Size":16935,"Path":"/Shared/MikTests/Blog/application-insights-send-file-schema.PNG","Name":"application-insights-send-file-schema.PNG","Locked":false,"EntryId":"09186e55-f629-40c7-a377-8a1a504a13e3","GroupId":"f99aef8f-e2d7-4365-9369-dbb6339038cd","LastModified":"2017-08-16T14:00:36Z","UploadedBy":"mik","NumberOfVersions":1},
    {"Checksum":"88c0c9416c2f75fc0b98d33dc240a7e771c4dd6dd7aa70342901134ef3eb90838e3de3facd73e12b8510c916e1a2c60c082cc664e77cea3572994ef774ef3e5c","Size":14911,"Path":"/Shared/MikTests/Blog/application_pool_settings.PNG","Name":"application_pool_settings.PNG","Locked":false,"EntryId":"cadab05d-8029-42cf-857c-dcedad8bb979","GroupId":"d692e745-0682-4c47-9fda-8a8675572bff","LastModified":"2017-04-10T09:37:52Z","UploadedBy":"mik","NumberOfVersions":1},
    {"Checksum":"b9b02a5b7b5149c50477d3fd9c14d4aebb96ceb268bfa105fa4b7507cc6306f9c5d20e67817444be39a94ef38c06d83423570a641c28506f00aa23770ade4fdf","Size":46298,"Path":"/Shared/MikTests/Blog/azure-connection-string.png","Name":"azure-connection-string.png","Locked":false,"EntryId":"5b84c1b3-03b2-4076-8eae-b23e0ff0b68f","GroupId":"d85bbf46-cb92-461a-bb69-d5fa712ddd64","LastModified":"2017-04-11T11:48:58Z","UploadedBy":"mik","NumberOfVersions":1}
    ],"Name":"Blog","Path":"/Shared/MikTests/Blog","FolderId":"9c23d12c-5b91-40e3-bc81-4fb71fcf491a","AllowedFileLinkTypes":[],"AllowedFolderLinkTypes":[]},"AsFile":null}
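The JSON above shows the shape of a folder listing: a `Path`, plus a `Files` collection whose entries carry `Name` and `Size`. As a minimal sketch of working with that shape, the snippet below summarizes a listing. `FileEntry`, `FolderListing` and `ListingSummary` are hypothetical stand-in types that only mirror those JSON property names so the example runs without the package. They are not the package's real classes, so adapt the property access to whatever `ListFileOrFolder` returns in your version.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-ins mirroring the JSON property names shown above.
public class FileEntry
{
    public string Name { get; set; }
    public long Size { get; set; }
}

public class FolderListing
{
    public string Path { get; set; }
    public List<FileEntry> Files { get; set; } = new List<FileEntry>();
}

public static class ListingSummary
{
    // Builds a one-line summary: file count, total size, and the largest file (if any).
    public static string Describe(FolderListing folder)
    {
        var totalBytes = folder.Files.Sum(f => f.Size);
        var largest = folder.Files.OrderByDescending(f => f.Size).FirstOrDefault();

        var summary = folder.Path + ": " + folder.Files.Count + " files, " + totalBytes + " bytes";
        return largest == null ? summary : summary + ", largest: " + largest.Name;
    }
}
```

With the real result you would read the same values from the folder part of the listing (`AsFolder` in the JSON above) after checking `IsFolder`.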

Creating new folder

    var createdFolder = await client.Files.CreateFolder("Shared/MikTests/Blog/NewFolder");

Sending a file

    var filePath = Server.MapPath("~/Content/myPhoto.jpg");

    // Load the local file into memory, then upload it to the given Egnyte path.
    var stream = new MemoryStream(System.IO.File.ReadAllBytes(filePath));
    var uploaded = await client.Files.CreateOrUpdateFile("Shared/MikTests/Blog/myPhoto.jpg", stream);

Deleting file or folder

    var path = "Shared/MikTests/Blog/myPhoto.jpg";
    var deleted = await client.Files.DeleteFileOrFolder(path, entryId: "9355165a-e599-4148-88c5-0d3552493e2f");

Downloading file

    var path = "Shared/MikTests/Blog/myPhoto.zip";
    var responseStream = await client.Files.DownloadFileAsStream(path);
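Once you have the download as a stream, you will usually want to write it somewhere. The sketch below uses only the .NET base class library and assumes that the value returned by `DownloadFileAsStream` is, or exposes, a `System.IO.Stream`; the exact return type is not shown in this post. `StreamSaver` and `SaveToFileAsync` are hypothetical names introduced for illustration.

```csharp
using System.IO;
using System.Threading.Tasks;

// Hypothetical helper for illustration; works with any readable Stream.
public static class StreamSaver
{
    // Copies the source stream to targetPath (overwriting an existing file)
    // and returns the number of bytes written.
    public static async Task<long> SaveToFileAsync(Stream source, string targetPath)
    {
        using (var target = new FileStream(
            targetPath,
            FileMode.Create,
            FileAccess.Write,
            FileShare.None,
            bufferSize: 81920,
            useAsync: true))
        {
            await source.CopyToAsync(target);
            await target.FlushAsync();
            return target.Length;
        }
    }
}
```

Copying stream to stream avoids holding the whole file in memory, which matters for larger downloads such as the zip file in the example above.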

Creating a user

    var createdUser = await client.Users.CreateUser(
        new Users.NewUser
        {
            UserName = "mikTest100",
            ExternalId = Guid.NewGuid().ToString(),
            Email = "mik.bialecki+test100@gmail.com",
            FamilyName = "Białecki",
            GivenName = "Michał",
            Active = true,
            AuthType = Users.UserAuthType.SAML_SSO,
            UserType = Users.UserType.StandardUser,
            IdpUserId = "mbialeckiTest100",
            UserPrincipalName = "mik.bialecki+testp100@gmail.com"
        });

Updating a user

    var updatedUser = await client.Users.UpdateUser(
        new Users.UserUpdate
        {
            Id = 12824215695,
            Email = "mik.bialecki+test100@gmail.com",
            FamilyName = "Białecki II",
            GivenName = "Michał"
        });

Conclusion

As you can see, using the Egnyte.API NuGet package is very simple. I presented only some of its capabilities, but if you want more, feel free to contribute to the public repository: https://github.com/egnyte/egnyte-dotnet. And if you have any questions, just let me know 🙂

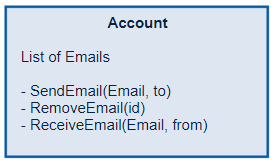

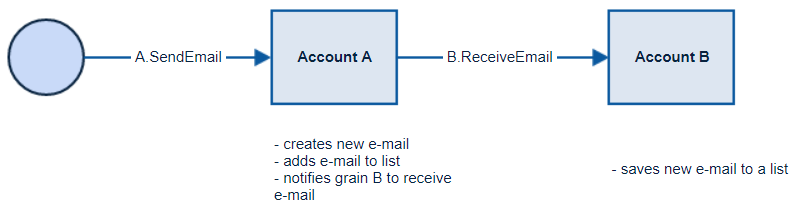

Microsoft Orleans is a developer-friendly framework for building distributed, high-scale computing applications. It does not require the developer to implement concurrency or a data storage model. Instead, it requires the developer to use predefined building blocks and enforces that the application is built in a certain way. As a result, Microsoft Orleans gives the developer a framework with exceptional performance.

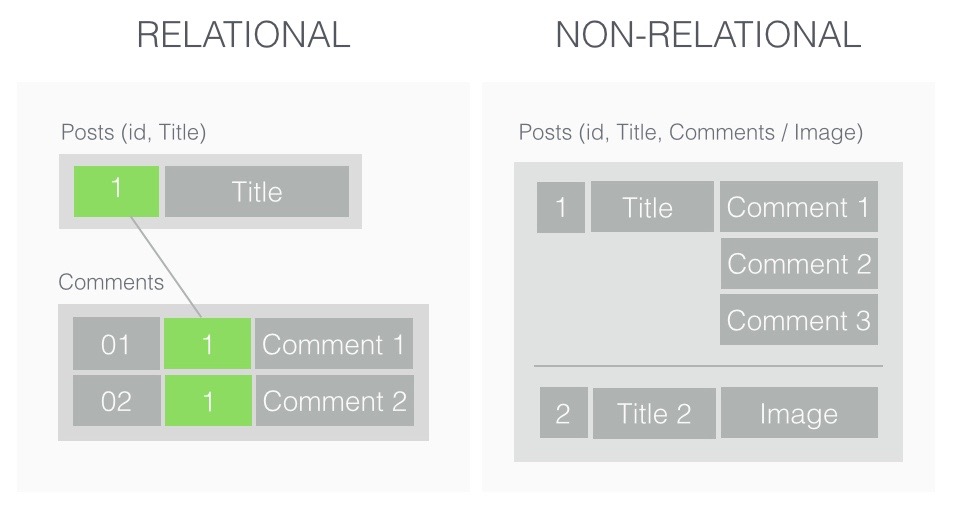

The actor model is best suited to fine-grained data, where actors can be easily identified and their state easily decoupled. An actor accesses its data instantly, because it holds that data in memory, and the same goes for notifying other actors. Taking that into account, Microsoft Orleans is most beneficial where an application needs to handle many small operations that change application state. With traditional storage, for example a SQL database, the application needs to handle concurrency when accessing the data, whereas in Orleans the data is well divided. You may think that there have to be data updates that change shared storage, but that’s a matter of changing the way the architecture is planned.

Egnyte is a company that provides software for enterprise file synchronization and sharing. Egnyte offers cloud storage that lets business users securely access and share data across the company. The API offers a RESTful interface: all requests and responses are formatted as JSON, strings are encoded as UTF-8, and all calls must be made over HTTPS.
