MS SQL Server provides functionality to quickly insert large amounts of data. It is called Bulk Copy and is performed by SqlBulkCopy class. I already compared how fast is it compared to EF Core 5 in this post: https://www.michalbialecki.com/2020/05/03/entity-framework-core-5-vs-sqlbulkcopy-2/, but this time I want to check something different – linq2db library.

What is Linq2db

Let’s check how Linq2db is described on its website:

LINQ to DB is the fastest LINQ database access library offering a simple, light, fast, and type-safe layer between your POCO objects and your database.

Sounds impressive and recently I discovered that there is an EF Core extensions package linq2db.EntityFrameworkCore, which is integrated with EF Core and enriches a DbContext with some cool features.

The most interesting are:

- Bulk copy (bulk insert)

- Fast Eager Loading (incomparable faster on massive

Includequery) - MERGE statement support

- Temporary Tables support

- Full-Text Search extensions

- and a few more

Let’s write some code

In the PrimeHotel project, I already implemented SqlBulkCopy method to insert profiles to db. First I generate profiles with Bogus library and then insert them. In this example, I’m going to use 3 methods from ProfileController:

- GenerateAndInsert – implemented with pure EF Core

- GenerateAndInsertWithSqlCopy – implemented with SqlBulkCopy class

- GenerateAndInsertWithLinq2db – implemented with Linq2db

Let me show you quickly how those 3 methods look like. The first one is GenerateAndInsert, implemented with pure Entity Framework Core 5.

[HttpPost("GenerateAndInsert")]

public async Task<IActionResult> GenerateAndInsert([FromBody] int count = 1000)

{

Stopwatch s = new Stopwatch();

s.Start();

var profiles = GenerateProfiles(count);

var gererationTime = s.Elapsed.ToString();

s.Restart();

primeDbContext.Profiles.AddRange(profiles);

var insertedCount = await primeDbContext.SaveChangesAsync();

return Ok(new {

inserted = insertedCount,

generationTime = gererationTime,

insertTime = s.Elapsed.ToString()

});

}

I use a Stopwatch class to measure how long does it take to generate profiles with the GenerateProfiles method and how long does it take to insert them.

GenerateAndInsertWithSqlCopy is implemented with SqlBulkCopy class:

[HttpPost("GenerateAndInsertWithSqlCopy")]

public async Task<IActionResult> GenerateAndInsertWithSqlCopy([FromBody] int count = 1000)

{

Stopwatch s = new Stopwatch();

s.Start();

var profiles = GenerateProfiles(count);

var gererationTime = s.Elapsed.ToString();

s.Restart();

var dt = new DataTable();

dt.Columns.Add("Id");

dt.Columns.Add("Ref");

dt.Columns.Add("Forename");

dt.Columns.Add("Surname");

dt.Columns.Add("Email");

dt.Columns.Add("TelNo");

dt.Columns.Add("DateOfBirth");

foreach (var profile in profiles)

{

dt.Rows.Add(string.Empty, profile.Ref, profile.Forename, profile.Surname, profile.Email, profile.TelNo, profile.DateOfBirth);

}

using var sqlBulk = new SqlBulkCopy(connectionString);

sqlBulk.DestinationTableName = "Profiles";

await sqlBulk.WriteToServerAsync(dt);

return Ok(new

{

inserted = dt.Rows.Count,

generationTime = gererationTime,

insertTime = s.Elapsed.ToString()

});

}

Notice that this implementation is much longer and I needed to create DataTable object, to pass my data as a table.

And finally, GenerateAndInsertWithLinq2db implementation, which uses linq2db library.

[HttpPost("GenerateAndInsertWithLinq2db")]

public async Task<IActionResult> GenerateAndInsertWithLinq2db([FromBody] int count = 1000)

{

Stopwatch s = new Stopwatch();

s.Start();

var profiles = GenerateProfiles(count);

var gererationTime = s.Elapsed.ToString();

s.Restart();

using (var db = primeDbContext.CreateLinqToDbConnection())

{

await db.BulkCopyAsync(new BulkCopyOptions { TableName = "Profiles" }, profiles);

}

return Ok(new

{

inserted = profiles.Count(),

generationTime = gererationTime,

insertTime = s.Elapsed.ToString()

});

}

This method is almost as short as EF Core one, but it creates a DataConnection with CreateLinqToDbConnection method.

The results

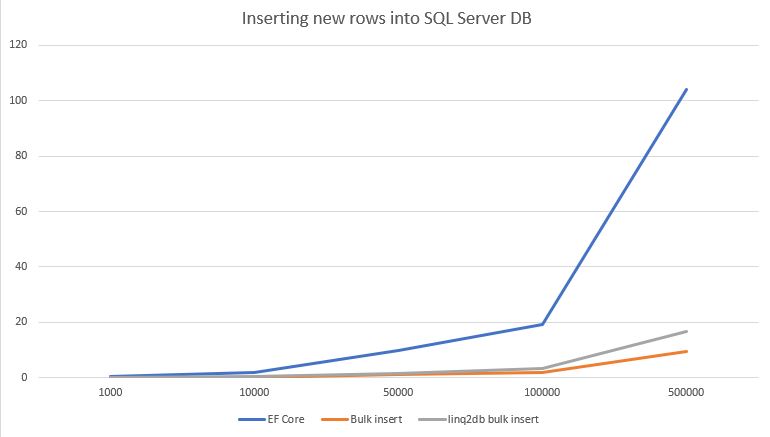

I compared those 3 methods with inserting 1k, 10k, 50k, 100k and 500k records. How fast would it be? Let’s check.

| EF Core | Bulk insert | linq2db bulk insert | |

| 1000 | 0.22 | 0.035 | 0.048 |

| 10000 | 1.96 | 0.2 | 0.318 |

| 50000 | 9.63 | 0.985 | 1.54 |

| 100000 | 19.35 | 1.79 | 3.18 |

| 500000 | 104 | 9.47 | 16.56 |

Here is a table with results in seconds. EF Core itself isn’t impressive at all, but combined with Linq2db library is almost as fast as a bulk copy.

And here is the chart, the lower value, the better.

Funny thing – while testing I noticed, that generating test data is actually slower than inserting to DB, wow 😀

The summary

Linq2db is an impressive library and already offers a lot. From GitHub, it seems that it is a well-established project with a bunch of contributors. Knowing that I’m surprised that I haven’t come across it earlier.

A bulk insert with linq2db is almost as fast as using SqlBulkCopy class but is much cleaner and shorter. It is also less error-prone and I would definitely use it in my projects.

All code posted here is available on my GitHub.

Hope it will be useful for you as well, cheers 😄

Hi,

We’re trying to use the package with MySQL and Pomelo. We have a parent -> child1 -> child2 -> child3 scenario.

We tried BulkCopyAsync but only parent is getting populated. Is there any settings required that we are missing which ensures that the child entities are populated as well.

Just add that option 2 can be achieved easily with simple code like option 1 using:

https://github.com/borisdj/EFCore.BulkExtensions

Disclaimer: I’m the author